Future foundations

In the government’s recent integrated review, a task force was announced to bring together government and industry to develop the UK’s artificial intelligence (AI) capabilities, with a particular focus on foundation models and large language models. In this article, we explain the basics of foundation models, how they can be used, and where Roke is working in this field.

Foundational AI

The term “foundation model” comes from the Stanford report “On the Opportunities and Risks of Foundation Models”. It refers to massively large AI models, such as those underpinning ChatGPT, that are currently generating so much interest in the media. Companies are training these models on vast volumes of data from the web with minimal human supervision, and using them to answer questions, write essays, and perform other general tasks. The fundamental idea is that the models gain a general understanding of the patterns underpinning language, images, audio, video, and so on. Having acquired this general knowledge, they can be adapted, with specialised applications layered on top to perform more specific tasks (such as intelligence analytics).

The theory is that it is easier to adapt these general foundation models than to develop specialised models from scratch. However, if most future AI algorithms are based on just a few especially large models fine-tuned for specific tasks, any fault or flaw in an underlying model will propagate to every model derived from it. Methods already exist for planting undetectable backdoors in machine-learned models, and these can work at a very fine-grained level, targeting single data points.
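To make the idea of adapting a general model concrete, here is a minimal sketch in Python under deliberately toy assumptions. `base_features` is a hypothetical stand-in for a frozen, pre-trained foundation model, and each specialised application is a small logistic-regression "head" trained only on that model's outputs, with the base never updated. Real foundation models are vastly larger and are often fine-tuned end to end or with parameter-efficient methods, so this illustrates the layering principle only, not any particular system.

```python
import math
import random


def base_features(x):
    """Hypothetical stand-in for a frozen foundation model.

    Maps a raw 2-D input to a fixed feature vector. Nothing in this
    function is changed when a specialised head is trained on top of it."""
    x0, x1 = x
    return [x0 + x1, x0 - x1, x0 * x1]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(data, epochs=100, lr=0.5):
    """Fit a logistic-regression head on the frozen features with SGD.

    Only the head's weights and bias are learned; base_features is reused as-is."""
    w = [0.0, 0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = base_features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(head, x):
    w, b = head
    z = sum(wi * fi for wi, fi in zip(w, base_features(x))) + b
    return sigmoid(z) >= 0.5


def accuracy(head, data):
    return sum(predict(head, x) == bool(y) for x, y in data) / len(data)


def make_task(label_fn, n, rng):
    """Generate n labelled points uniformly in [-1, 1] x [-1, 1]."""
    points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    return [(p, int(label_fn(p))) for p in points]


# Two hypothetical specialised tasks that share the same frozen base.
def task_a(p):
    return p[0] > p[1]


def task_b(p):
    return p[0] + p[1] > 0


rng = random.Random(0)  # fixed seed so the example is reproducible
head_a = train_head(make_task(task_a, 200, rng))
head_b = train_head(make_task(task_b, 200, rng))
test_a = make_task(task_a, 500, rng)
test_b = make_task(task_b, 500, rng)
print("task A accuracy:", accuracy(head_a, test_a))
print("task B accuracy:", accuracy(head_b, test_b))
```

Because both heads see the input only through `base_features`, any fault or backdoor in that one function is inherited by every head built on it, which is exactly the propagation risk described above.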

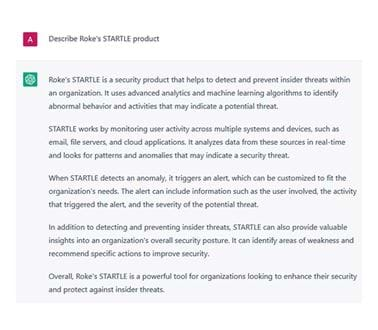

The other major challenge with large models designed to perform well on general tasks is that, without fine-tuning, they can perform poorly outside their usual domain. Most dangerously, many current models carry on as usual and confidently give a wrong but plausible-sounding answer, with no indication of their level of confidence. There are already many examples of this, and we tested it ourselves by asking a large language model to explain our AI product, STARTLE. The answer shows that, while the model knows STARTLE uses machine learning and can be used for network protection and monitoring, it focuses heavily on insider threat and completely misses STARTLE’s core aspect: its biologically inspired, explainable algorithm.
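One simple way to attach an explicit confidence to an answer, and to decline to answer when it is low, is sketched below in Python. It assumes a classifier that outputs raw scores (logits) over a fixed set of hypothetical labels, converts them to probabilities with softmax, and abstains when the top probability falls below a threshold. This is a generic selective-prediction technique, not a description of how any particular chatbot works. The labels, logits and the 0.7 threshold are illustrative assumptions, and raw softmax scores are often over-confident, so in practice they would need calibrating (for example with temperature scaling) on held-out data.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def answer_with_confidence(labels, logits, threshold=0.7):
    """Return (answer, confidence), or (None, confidence) to abstain.

    'threshold' is a hypothetical tuning parameter that would normally be
    chosen on held-out data after calibrating the scores."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    confidence = probs[best]
    if confidence < threshold:
        return None, confidence  # abstain rather than guess
    return labels[best], confidence


labels = ["label_a", "label_b", "label_c"]  # hypothetical classes
print(answer_with_confidence(labels, [4.0, 1.0, 0.5]))  # clear winner: answers "label_a"
print(answer_with_confidence(labels, [1.2, 1.0, 1.1]))  # near tie: abstains with None
```

Reporting the confidence alongside the answer, and abstaining below a threshold, gives a human in the loop a signal that the model may be outside its usual domain.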

Assurance, ethics, and regulation

For the government AI task force to succeed and take the UK forward with a sovereign foundation model, we must first provide a clear strategy for assuring and regulating AI and data, including the pro-innovation approach to AI regulation. This strategy must address people’s right to privacy, the ethical development and use of AI, demonstrable assurance principles, and sustainability. There is a continual arms race between AI models developed for routine tasks and AI that aims to fool or disrupt those models. AI models must therefore be managed like any other product or service, which requires:

- Understanding and monitoring the full lifecycle costs (including climate impact)

- Continual assessment for bias or vulnerabilities, followed by patching according to urgency

- Terms of use and regulation

In principle, foundation models could make all of this simpler. Assessing just a handful of base models in fine detail could make the specialised versions much easier to maintain, rather than repeating the whole assurance process for each one. However, because these models are large and potentially ‘black-box’, they may make assurance more complicated if the assurance of the base model itself is not clear.

Similarly, we do not yet know whether the carbon footprint of training just a few exceptionally large models and then fine-tuning them is greater or smaller than that of training many small models from scratch.
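The open question can at least be framed as a simple break-even condition. As an illustrative assumption that ignores inference costs, let $C_{\text{base}}$ be the footprint of pre-training one foundation model, $C_{\text{ft}}$ that of one fine-tuning run, $C_{\text{small}}$ that of training one specialised model from scratch, $k$ the number of foundation models and $n$ the number of specialised applications. The foundation-model route has the smaller training footprint when

$$
k\,C_{\text{base}} + n\,C_{\text{ft}} < n\,C_{\text{small}}
\quad\Longleftrightarrow\quad
n > \frac{k\,C_{\text{base}}}{C_{\text{small}} - C_{\text{ft}}}, \qquad \text{assuming } C_{\text{small}} > C_{\text{ft}}.
$$

If fine-tuning costs as much as training from scratch ($C_{\text{ft}} \ge C_{\text{small}}$), no number of applications recovers the pre-training cost. None of these quantities is yet known with confidence, which is why the answer remains open.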

All of this requires a significant investment in MLOps – the management and use of machine-learned models in development and operation. By defining suitable MLOps principles, we can:

- Integrate security as part of an automated assessment stage in the development and sustainment of models

- Repeatably and quantitatively assess and compare models

- Rapidly release new versions of existing models
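A minimal sketch of the automated assessment stage from the list above, in Python. It assumes a fixed evaluation set, models represented as plain functions from features to a predicted label, and two illustrative release criteria: the candidate must be at least as accurate as the current baseline, and the accuracy gap between groups (a crude bias check) must not exceed a hypothetical `max_gap` of 0.1. The `Example` type, the group names and the thresholds are all assumptions for illustration; a real pipeline would add security tests, robustness checks and many more metrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    features: tuple
    label: int
    group: str  # hypothetical attribute used for the bias check


def accuracy(model, examples):
    return sum(model(e.features) == e.label for e in examples) / len(examples)


def group_gap(model, examples):
    """Largest difference in accuracy between any two groups."""
    groups = {}
    for e in examples:
        groups.setdefault(e.group, []).append(e)
    scores = [accuracy(model, g) for g in groups.values()]
    return max(scores) - min(scores)


def release_gate(candidate, baseline, eval_set, max_gap=0.1, min_gain=0.0):
    """Automated assessment stage: returns (approved, report).

    Running the same fixed eval_set every time makes the comparison repeatable."""
    report = {
        "candidate_acc": accuracy(candidate, eval_set),
        "baseline_acc": accuracy(baseline, eval_set),
        "candidate_gap": group_gap(candidate, eval_set),
    }
    approved = (
        report["candidate_acc"] - report["baseline_acc"] >= min_gain
        and report["candidate_gap"] <= max_gap
    )
    return approved, report


eval_set = [
    Example((0.9,), 1, "group_1"),
    Example((0.2,), 0, "group_1"),
    Example((0.8,), 1, "group_2"),
    Example((0.1,), 0, "group_2"),
]


def baseline(features):
    return 1  # always predicts the positive class


def candidate(features):
    return int(features[0] > 0.5)


def biased_candidate(features):
    return int(features[0] > 0.85)  # misses group_2's positive example


print(release_gate(candidate, baseline, eval_set))         # approved
print(release_gate(biased_candidate, baseline, eval_set))  # rejected: group gap of 0.5
```

The biased candidate still beats the baseline on overall accuracy (0.75 against 0.5), but the gate rejects it because its accuracy differs sharply between groups; a single headline metric would have let it through.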

The BBC Reith Lectures in 2021 focused on “Living with Artificial Intelligence”, and the world has certainly taken a step in that direction as models such as GPT-4 power Microsoft’s Bing search engine and make BBC headlines. As a nation, we have a moral obligation to keep investing in capabilities that can save and improve lives across the world. These capabilities are increasingly democratised: a small team can easily spin them up, and anyone with an internet connection can try GPT-4 and use its outputs for free.

What next?

Globally agreed regulations and ethics will need to be debated, but they cannot be relied upon alone. As with offensive and defensive cyber capabilities, we must research and experiment with both offensive and defensive AI to protect everyone from future threats.

Roke has a long-standing interest in developing and maintaining AI systems, as well as in understanding their vulnerabilities: from our STARTLE® system, which detects threats and informs the human in the loop of any anomalous behaviour, to the development of adversarial patches designed to fool image recognition algorithms.

We have made use of foundation models to demonstrate the art of the possible as well as their vulnerabilities, often fine-tuning these models to deliver niche capabilities to our clients. We continue to invest in our internal ethics processes and engage with academia to ensure our approach is informed and innovative.

In addition, our history in robotics has enabled us to work with the latest available technology, such as Boston Dynamics’ Spot and Clearpath robots. Our aim is to bring AI to a heterogeneous range of robots that could then act autonomously as a squad to undertake missions. We believe that the next key foundation model needs to bring together specialisms in robotics, AI, and cyber to deliver capability that can operate across both physical and cyber space.
