The Challenge

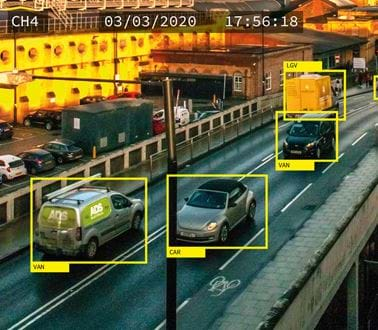

Many organisations are reaping the rewards of AI and machine learning systems but are increasingly concerned about cyber threats directed at their AI components. We were tasked with assessing how easily adversarial techniques could be used to ‘fool’ AI systems in applications such as video surveillance.

The Approach

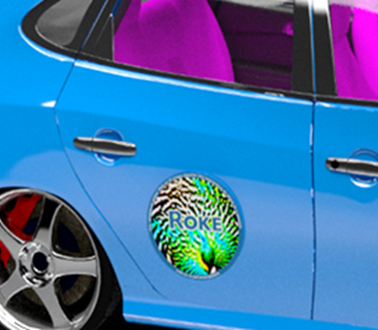

We developed adversarial patches: 2D images designed to be attached to a flat surface of a 3D object. These patches fooled AI image classifiers into producing false positives when placed on roads, attached to walls, or displayed on clothing or accessories such as bags and hats.
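To make ‘fooling a classifier’ more concrete, here is a toy sketch of the general adversarial-patch idea from the public literature. It is not the method used in this engagement. It assumes a hypothetical 8×8 grayscale scene and a stand-in linear classifier; `W`, `TARGET`, `train_patch` and the other names are illustrative only. The patch is optimised by gradient ascent on the target-class log-probability, averaged over random backgrounds and random placements, so that it works wherever it is stuck.

```python
import numpy as np

# Toy setup: every size and the classifier below are hypothetical stand-ins.
IMG = 8        # image side length in pixels (grayscale, values in [0, 1])
PATCH = 3      # patch side length in pixels
N_CLASSES = 3
TARGET = 2     # class the patch should push the classifier towards

rng = np.random.default_rng(0)
# Stand-in "image classifier": a fixed random linear model, logits = W @ pixels + b.
W = rng.normal(size=(N_CLASSES, IMG * IMG))
b = np.zeros(N_CLASSES)
W_IMG = W.reshape(N_CLASSES, IMG, IMG)  # per-class weights laid out as images


def logits(img):
    return W @ img.ravel() + b


def log_softmax(z):
    z = z - z.max()
    return z - np.log(np.exp(z).sum())


def apply_patch(img, patch, row, col):
    """Return a copy of img with the patch pasted at (row, col)."""
    out = img.copy()
    out[row:row + PATCH, col:col + PATCH] = patch
    return out


def random_scene(gen):
    """Random background plus a random patch location inside the image."""
    img = gen.uniform(0.0, 1.0, size=(IMG, IMG))
    row, col = gen.integers(0, IMG - PATCH + 1, size=2)
    return img, row, col


def train_patch(steps=500, lr=0.1):
    """Projected stochastic gradient ascent on E[log p(TARGET)] over random scenes."""
    patch = rng.uniform(0.0, 1.0, size=(PATCH, PATCH))
    for _ in range(steps):
        img, row, col = random_scene(rng)
        z = logits(apply_patch(img, patch, row, col))
        p = np.exp(log_softmax(z))
        g_z = -p
        g_z[TARGET] += 1.0  # d log p_T / dz = onehot(TARGET) - p
        # Logits are linear in pixels, so dz_k/dpatch is class k's weights under the patch.
        g_patch = np.tensordot(g_z, W_IMG[:, row:row + PATCH, col:col + PATCH], axes=1)
        patch = np.clip(patch + lr * g_patch, 0.0, 1.0)  # stay in valid pixel range
    return patch


def mean_target_log_prob(patch, trials=2000, seed=1):
    """Average log p(TARGET) over fixed held-out scenes (same scenes for any patch)."""
    gen = np.random.default_rng(seed)
    total = 0.0
    for _ in range(trials):
        img, row, col = random_scene(gen)
        total += log_softmax(logits(apply_patch(img, patch, row, col)))[TARGET]
    return total / trials


if __name__ == "__main__":
    grey = np.full((PATCH, PATCH), 0.5)
    patch = train_patch()
    print("grey patch   :", mean_target_log_prob(grey))
    print("trained patch:", mean_target_log_prob(patch))
```

Because the stand-in model is linear, the gradient is exact and cheap to compute. A real attack would instead backpropagate through a deep network and would typically also randomise scale, rotation and lighting, so that a printed patch still works in the physical world.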

The Outcome

We provided demonstrations of adversarial patch attacks in the customer’s operational environment. We have since developed further concepts for other adversarial attacks and novel defence measures, all designed to operate in real-world environments and to address the associated cyber threats.
