The Challenge

Machine Learning (ML) systems are increasingly common across industry and the public and private sectors, with applications ranging from aircraft engine inspection to self-driving cars. ML is a branch of Artificial Intelligence (AI) in which a system is trained on gathered data to build a model that can then make predictions about new inputs.

However, malicious actors have learnt how to exploit ML algorithms to cause problems for system owners. Examples include tricking self-driving cars into misreading speed limit signs and deceiving face and object detection systems into producing incorrect results.

The complexity of ML technology means that many developers inadvertently leave security flaws in their systems, which attackers can then exploit. Common flaws that increase cyber security risk include: retraining models on user input, leaving models open to reverse engineering, and training models on data from unknown sources.

The National Cyber Security Centre (NCSC) identified the need for a set of easy-to-understand principles to help developers create and use ML models safely and securely. To help triage the threat landscape, the NCSC tasked Roke with working collaboratively alongside its Security of ML team.

The Approach

To deliver this task, we deployed an ML subject matter expert, Kirt, to the NCSC to provide direct, daily support. Kirt applied his own knowledge and also drew on our expertise in other relevant capabilities, including high assurance systems and Software Reverse Engineering (SRE). This brought together a variety of specialisms and ways of thinking to research where threats could originate.

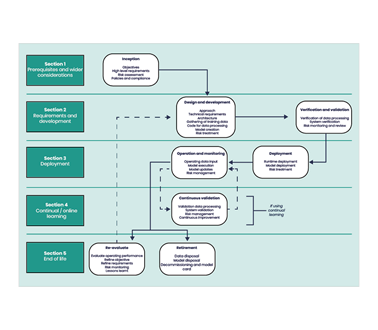

Once we understood the threat landscape, we mapped the points in the ML lifecycle that each type of attack needs access to. This allowed us to deploy a red team, acting as simulated malicious actors, against a generic ML workflow to understand which parts of it are most vulnerable.

The red teaming produced a visual representation of the areas in the ML lifecycle that are most vulnerable to attacks specific to ML systems. It showed that many ML attacks, although different in nature, require access to the same vulnerable points in the lifecycle; protecting those points would therefore defend against a wide range of attacks.

The Outcome

Applying the results of our research, we developed a set of ML security principles focused on protecting and defending the vulnerable areas we had identified. These principles give developers the means to pre-emptively stop attackers from reaching the weak links in ML systems.

Drawing on our understanding of how the underlying attacks work, we tailored each principle to explain how to mitigate a specific vulnerability in the lifecycle and to list the attacks that could exploit it.

We then applied the principles to our work in other areas of Roke to test them against real-world use cases, including training AI to recognise cancerous growths in CT scans. Using these principles, the NCSC is now working with other organisations to help them develop safe and secure ML models.
